Developing a unified, low-cost, self-care mobile health application for common disease screening and early detection in low- and middle-income countries

Motivation: Noncommunicable diseases (NCDs) such as cardiovascular diseases (CVDs), chronic respiratory diseases, and neurodegenerative diseases kill about 41 million people every year, equivalent to 74% of all deaths globally, and account for the majority of the global disease burden and mortality (WHO, 2022). Most of these premature deaths occur in low- and middle-income countries. Heart attacks and strokes account for most NCD deaths (17.9 million people annually), with chronic respiratory diseases accounting for a further 4.1 million. In addition, common neurodegenerative diseases such as Alzheimer’s and Parkinson’s diseases affected about 29.8 million and 6.2 million people worldwide, respectively (Vos, Theo, et al., 2016). WHO also reports that the prevalence of CVDs is rising in developing countries, including Vietnam (WHO, CVD in Vietnam). In 2018, the Vietnam National Heart Association reported that about 25% of the Vietnamese population suffers from CVDs and 46% from hypertension, and that patients are being affected at increasingly young ages (IVIE, 2022). In practice, NCDs are often diagnosed late, misdiagnosed, or not diagnosed at all (Florencia Luna et al., 2020). For example, over 1 in 2 adults living with diabetes are undiagnosed, and over 3 in 4 adults with diabetes live in low- and middle-income countries (IDF Diabetes Atlas, 2022). Therefore, early screening, diagnosis, and treatment of NCDs play a crucial role in enabling timely treatment, preventing disease progression, and reducing morbidity and mortality. However, screening at scale is a major challenge for patients who live in rural areas with limited healthcare facilities and who may not have access to physicians or medical services. A major barrier to screening under-resourced populations is the lack of low-cost and accurate diagnostic tools (P. Fernandes and S. Brozak, 2020).
Existing diagnostic platforms are expensive, invasive, or require trained healthcare workers to operate, making them infeasible for deployment in resource-limited settings. AI-based mobile health applications are a promising way to address this challenge (Sarker, Iqbal H., et al., 2021). Advances in mobile health (mHealth) have helped health professionals with disease prevention and early detection, remote diagnosis, and self-care management (Wernhart, Anna, 2019). By collecting large-scale, multimodal data from smartphone sensors and wearable devices and analyzing them with machine/deep learning algorithms, such technologies enable inexpensive mobile screening for many medical conditions and can operate where healthcare facilities are limited, as in low- and middle-income countries like Vietnam. When linked to care providers and medical experts, these tools could identify common diseases earlier and could help improve health outcomes.

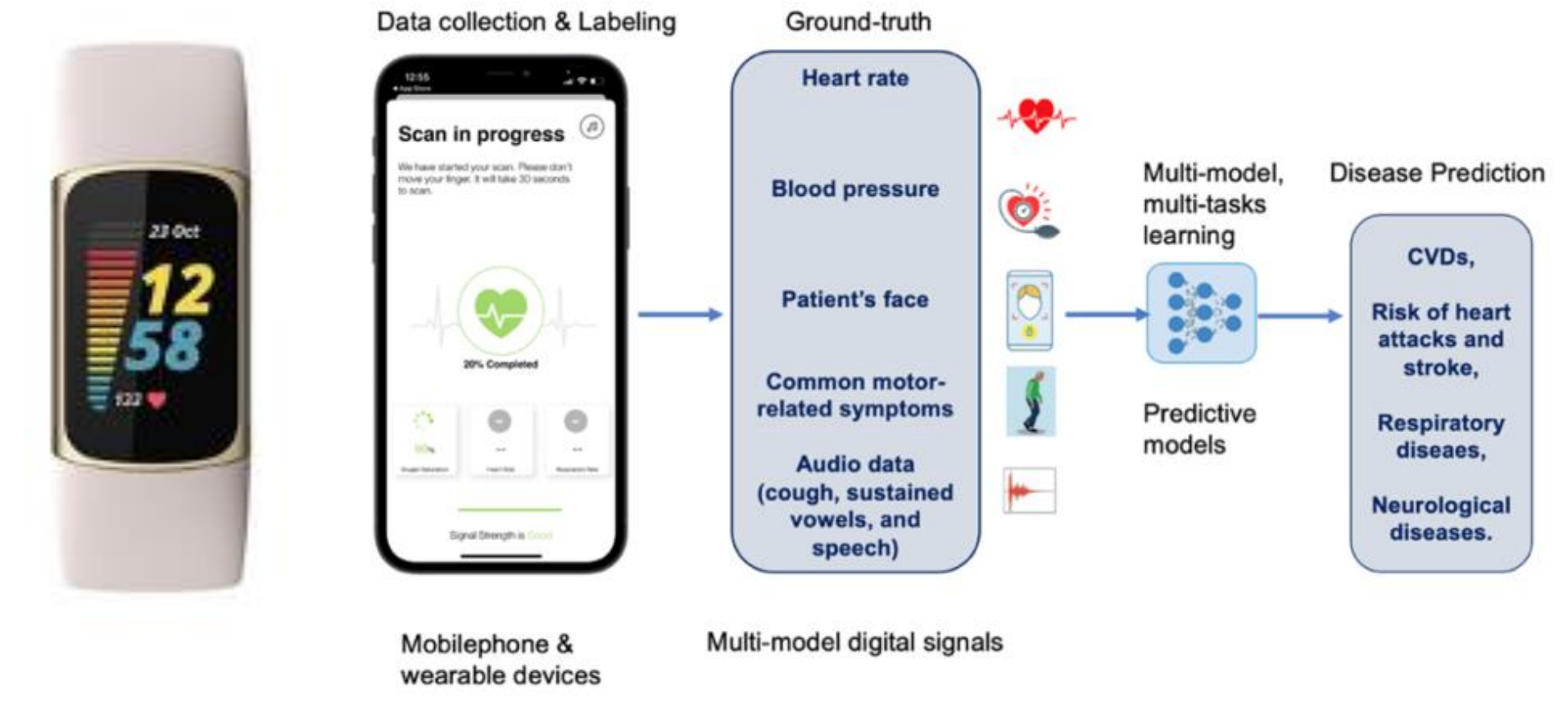

Objective: This work aims to develop a low-cost, unified machine learning-based screening tool that uses multimodal signals collected from smartphones and wearable devices to estimate the risk of common, high-burden NCDs, including stroke, chronic respiratory diseases, and neurodegenerative diseases (see Figure 1). We will clinically evaluate the app for common disease screening and early detection in low- and middle-income countries. The tool can be used at home or at the point of care and opens up the opportunity to bring digital healthcare solutions to millions of people across countries.

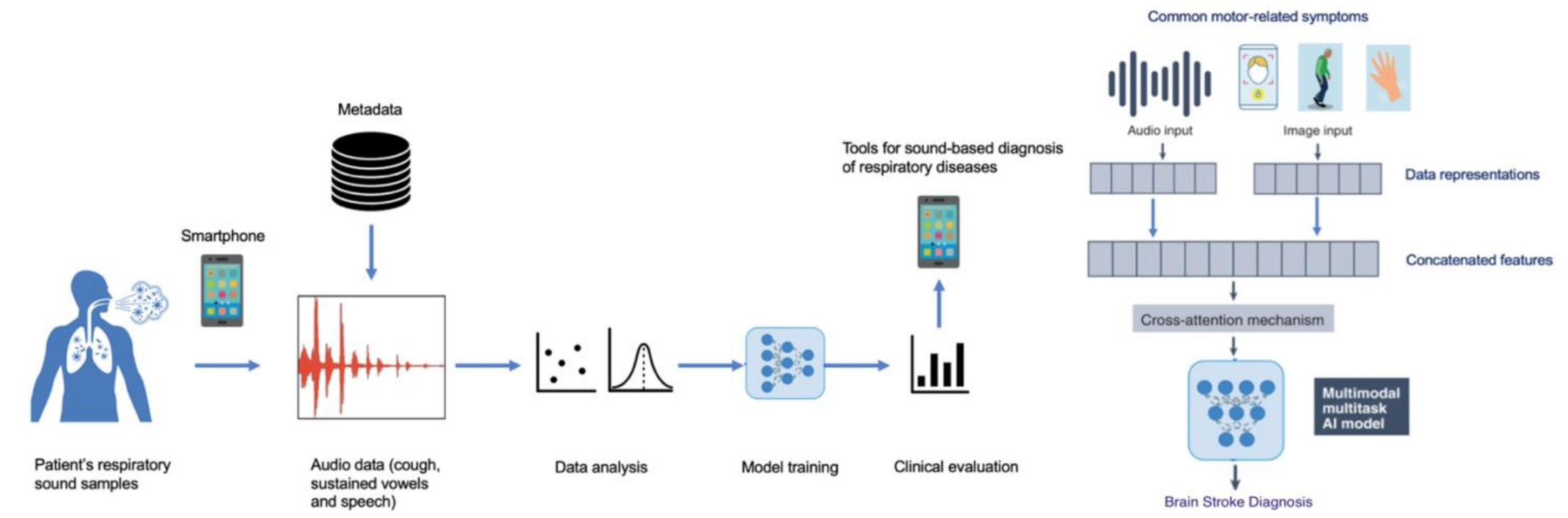

[Aim 1] Develop and clinically evaluate a multimodal AI system for predicting the risk of common heart diseases and stroke. Heart rate is considered a key risk factor for CVDs (Perret-Guillaume et al. 2019). The variability in the time interval between heartbeats may reveal patterns that are typical of heart-related pathologies. For example, detecting subtle changes in heart rate behavior makes it possible to investigate some types of abnormal heart function. In this proposal, we will collect multiple modalities of biosignals from smartphones and wearable devices (Google Fitbit) and apply machine learning algorithms to detect possible heart-related pathologies, in particular common heart diseases and stroke. Heart rate, blood pressure, SpO2, and patient speech will be collected and annotated to train a multimodal predictive model (see Figure 2b). The AI system will then be clinically evaluated; it could make screening more accessible and facilitate early treatment to prevent stroke. As a result, cardiac patients and doctors could use at-home recordings to check for sudden or significant changes.
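
To make the idea of variability in the time interval between heartbeats concrete, the sketch below computes two widely used time-domain heart rate variability summaries, SDNN and RMSSD, from a list of beat timestamps. It is only an illustration under stated assumptions: the function names and the sample timestamps are hypothetical, and the proposal does not specify which features the predictive model will actually use.

```python
import math

def rr_intervals_ms(beat_times_s):
    """Convert beat timestamps (seconds) into successive RR intervals (milliseconds)."""
    return [(b - a) * 1000.0 for a, b in zip(beat_times_s, beat_times_s[1:])]

def mean_heart_rate_bpm(rr_ms):
    """Mean heart rate in beats per minute, derived from the mean RR interval."""
    return 60000.0 / (sum(rr_ms) / len(rr_ms))

def sdnn(rr_ms):
    """Sample standard deviation of the RR intervals (overall variability)."""
    mean = sum(rr_ms) / len(rr_ms)
    return math.sqrt(sum((x - mean) ** 2 for x in rr_ms) / (len(rr_ms) - 1))

def rmssd(rr_ms):
    """Root mean square of successive RR differences (beat-to-beat variability)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Hypothetical beat timestamps, e.g. as a wearable might export them.
beats = [0.0, 0.8, 1.6, 2.5, 3.3, 4.2]
rr = rr_intervals_ms(beats)
print(round(mean_heart_rate_bpm(rr), 1), round(sdnn(rr), 1), round(rmssd(rr), 1))
```

Summaries like these could serve as compact inputs alongside blood pressure and SpO2, although a learned model may instead consume the raw interval series directly.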

Figure 1. Overview of our approach. Smartphones, wearable devices, and mobile applications are used to collect multiple data modalities concerning patient health conditions. Specifically, heart rate, blood pressure, SpO2, patients’ faces, and their common motor-related symptoms, as well as audio data (cough, sustained vowels, speech), will be collected, normalized, and annotated by medical experts. The data is then used to train AI-based models to predict the risk of common noncommunicable diseases such as CVDs, heart attacks, stroke, respiratory and neurological diseases.

[Aim 2] Analyze microphone-captured audio data for the detection of common respiratory diseases. We will develop a novel technique for machine learning-based estimation of patient respiratory rate from audio. Audio signals include cough, sustained vowels, and speech (Sharma, Neeraj, et al., 2020). Cough signals encode the state of the respiratory system and the pathologies involved (Shi, Yan, et al., 2018), and changes in them can reflect pathological conditions (Lavorini, Federico et al., 2020). Methods have been developed for automated recognition from cough of respiratory diseases such as pneumonia (Kosasih, Keegan, et al., 2014), asthma (Zolnoori, Maryam et al., 2012), croup (Sharan, Roneel V., et al., 2018), and COPD (Sharan, Roneel V., et al., 2018). Cough is also predictive of spirometry readings for monitoring asthma severity (Rao, MV Achuth, et al., 2017) because the mechanism of cough generation and the forced expiratory manoeuvre of spirometry are similar (Er, Orhan et al., 2008). Sustained vowel sounds are used to assess lung capacity, and increased inspiration time during speech is diagnostic of lung disease (Lee, Linda, et al., 1993), including asthma (Levy, Mark L., et al., 2006). Acoustic features will include neural bottleneck features designed for speaker diarization (Sarı, Leda, et al., 2019), articulation rate (Bhat, Suma et al., 2010), effort (Pietrowicz et al., 2015), and auditory roughness (He, Di, et al., 2017). Regularized neural architectures will take audio features as input and output estimated SpO2 (continuous), respiratory rate (continuous), and pulmonary disorder (categorical). Sequence-to-sequence networks will estimate intrinsic semantic features such as the type, duration, and frequency of cough and speech breathing. Our latest work on analyzing microphone-captured audio data for the detection of respiratory diseases is described in Harvill, John, et al. (2022).
To improve system performance, we will also consider combining the audio data with patient metadata in text form.
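
Because the planned networks produce two continuous outputs (SpO2 and respiratory rate) and one categorical output (pulmonary disorder), one natural way to train them jointly is a weighted multi-task loss. The sketch below shows such a loss for a single example, assuming squared error for the continuous targets and softmax cross-entropy for the categorical one. The function names, the task weights, and the choice of loss terms are illustrative assumptions, not the proposal's stated method; the regularization term (for example weight decay) is omitted.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def multitask_loss(pred_spo2, true_spo2, pred_rr, true_rr,
                   class_logits, true_class, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of two regression terms and one classification term.

    weights = (w_spo2, w_rr, w_cls) are hypothetical task weights.
    """
    w_spo2, w_rr, w_cls = weights
    spo2_term = (pred_spo2 - true_spo2) ** 2        # squared error, SpO2 in %
    rr_term = (pred_rr - true_rr) ** 2              # squared error, breaths/min
    cls_term = -math.log(softmax(class_logits)[true_class])  # cross-entropy
    return w_spo2 * spo2_term + w_rr * rr_term + w_cls * cls_term

# Hypothetical example: small regression errors, fairly confident correct class.
loss = multitask_loss(96.0, 97.0, 17.0, 16.0, [2.0, 0.0, -1.0], 0)
print(round(loss, 3))
```

In practice the continuous targets would usually be standardized first so that no single term dominates because of its units.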

Figure 2. (a) The main components of the proposed machine learning system for detecting respiratory diseases from sound data collected by smartphone; (b) Illustration of the key components in the proposed multimodal AI architecture for brain stroke diagnosis.

[Aim 3] Develop a mobile, self-guided quantitative motion analysis app to detect early risk factors of neurological diseases (Parkinson’s and Alzheimer’s disease). We will develop a multimodal AI architecture that incorporates data across modalities (voice, human postures, and movements) to detect early risk factors of common neurological diseases (e.g., Parkinson’s and Alzheimer’s disease). In particular, the machine learning system takes patient voice samples and patient poses, postures, and gaits captured by smartphones as input and learns to identify risk factors for neurological diseases.

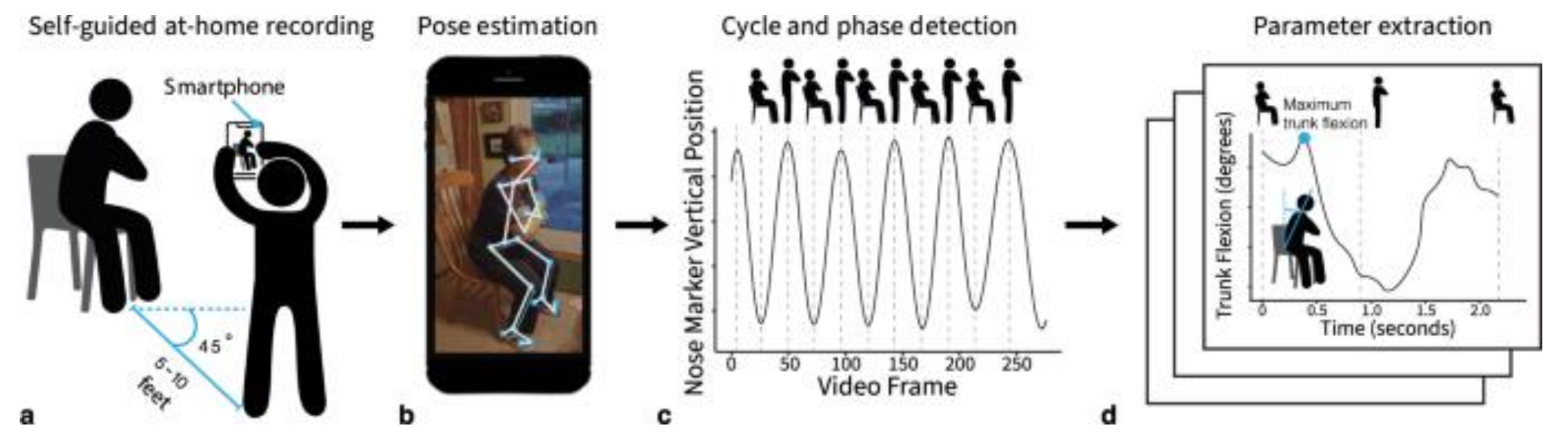

Figure 3. An example of a mobile application for collecting and analyzing movement data, presented by Boswell et al. (2023). The app performs a self-guided quantitative motion analysis of the widely used five-repetition sit-to-stand test using a smartphone. The quantitative movement parameters extracted from the smartphone videos were related to a diagnosis of osteoarthritis and to physical and mental health. This finding demonstrated that at-home movement analysis goes beyond established clinical metrics to provide objective and inexpensive digital outcome metrics for nationwide studies. Inspired by this study, we will develop a mobile app that incorporates data across modalities (voice, human postures, and movements) to detect early risk factors of common neurological diseases and provide clinical insights that help medical doctors in early detection and treatment.

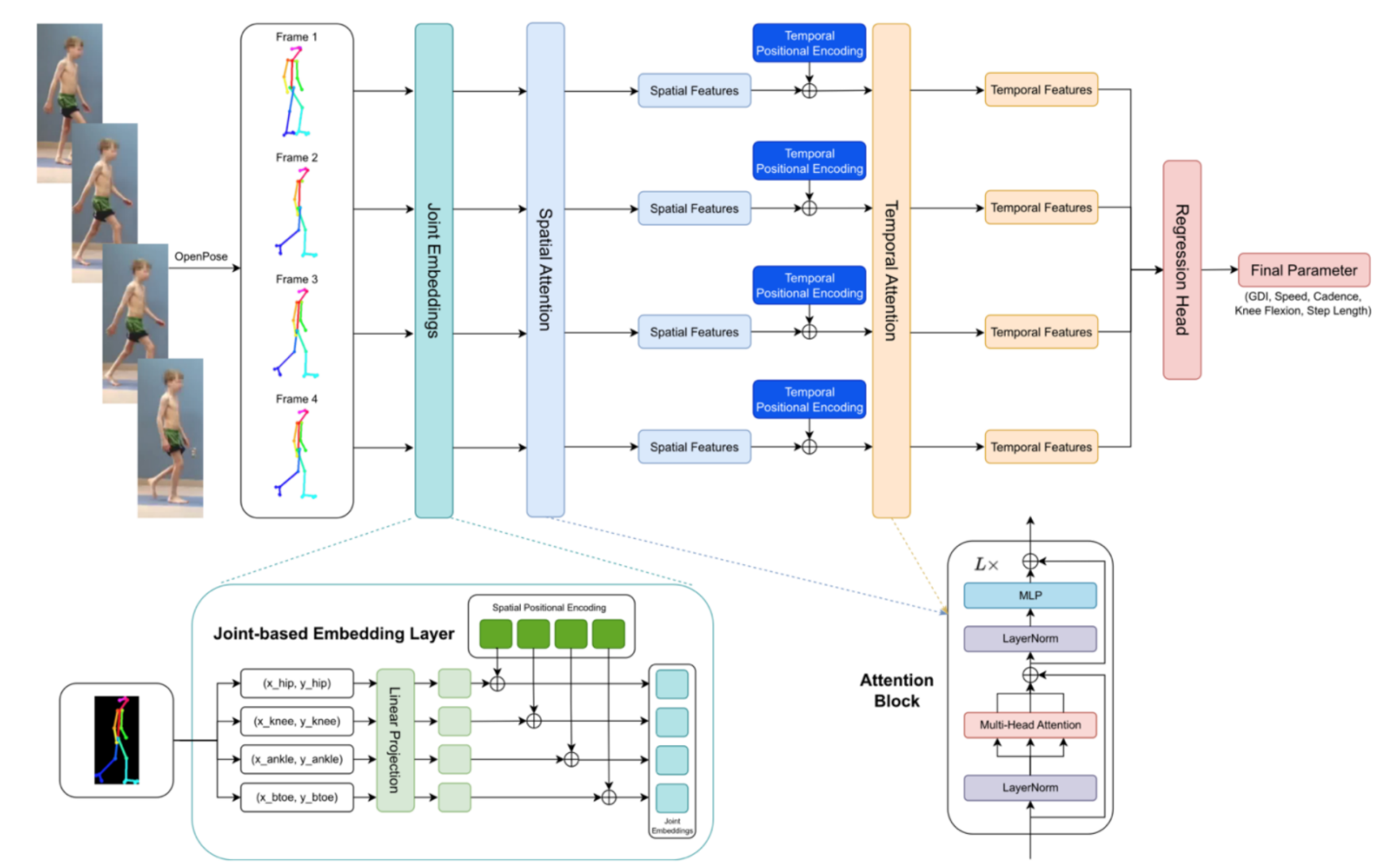

Figure 4. Our preliminary study, conducted at the VinUni-Illinois Smart Health Center by Dr. Hieu Pham, on a novel spatiotemporal deep learning network that estimates critical gait parameters from RGB videos captured by a single-view mobile phone camera. Empirical evaluations on a public dataset of cerebral palsy patients indicate that the proposed framework surpasses current state-of-the-art approaches and shows significant improvements in correlation and prediction error when predicting general gait parameters (including Walking Speed, Step Length, Gait Deviation Index (GDI), and Knee Flexion Angle at Maximum Extension). We will extend this work by incorporating more data modalities to improve the chance of detecting early risk factors of common neurological diseases.

Detailed Research Plan and Methods

Preliminary data: Acquiring high-quality data that is fully annotated with correct diagnoses is the key to the success of this project. Thanks to internal funding from VinUni, we have begun planning data collection from 100 subjects at the Vinmec Times City International Hospital and other major hospitals in Vietnam, such as Hanoi Medical University Hospital and Hospital 108. To collect the data, we have developed a mobile app that integrates with Google Fitbit. This app allows us to collect various biomedical signals including heart rate, blood pressure, SpO2, patients’ faces, and their common motor-related symptoms, as well as audio data (cough, sustained vowels, speech). In addition, the research team expects to gain access to data from other countries, most likely US datasets containing patient speech data that Professor Mark Hasegawa-Johnson (UIUC) will be able to access. These datasets will be used to train and validate machine/deep learning algorithms. The study protocol will be submitted for approval to the Institutional Review Boards of these hospitals. To protect data privacy, all sensitive subject information will be de-identified before any processing steps. The ground truth will be annotated by a group of experienced specialists. We are working closely with a network of 30 medical doctors in Vietnam who can support data collection and annotation.
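
As one possible shape for the de-identification step on tabular subject records, the sketch below drops direct identifier fields and replaces the subject ID with a keyed pseudonym (HMAC-SHA256), so that records from the same subject can still be linked without exposing the original ID. The field names, the key handling, and the 16-character truncation are assumptions made for illustration; the proposal does not describe the actual procedure, and face images and voice recordings are themselves identifying and would need separate treatment.

```python
import hashlib
import hmac

# Hypothetical set of direct identifier fields to remove from each record.
DIRECT_IDENTIFIERS = {"name", "phone", "address", "national_id"}

def pseudonym(subject_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym from a subject ID using a keyed hash."""
    digest = hmac.new(secret_key, subject_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def deidentify(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record without direct identifiers or the raw ID."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "subject_id"
    }
    cleaned["pseudonym"] = pseudonym(record["subject_id"], secret_key)
    return cleaned

# Hypothetical record; the key would be stored separately from the data.
record = {"subject_id": "VN-0001", "name": "Example Name",
          "phone": "000-000", "heart_rate_bpm": 72, "spo2_percent": 97}
print(sorted(deidentify(record, b"example-secret-key")))
```

Using a keyed hash rather than a plain hash means that someone without the key cannot recompute pseudonyms from a list of known subject IDs.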

Algorithm development

State-of-the-art machine/deep learning approaches (Borhani, Yasamin, 2022; Chen, Ye, et al., 2021; Julián N. Acosta et al., 2022; Aytekin, Idil, et al., 2022; Li, Xiangyu, et al., 2021; Hong, Weixiang, et al., 2022) will be explored for representation learning tasks. In addition, we aim to develop novel deep learning algorithms to tackle the challenges of the proposal. Most machine learning and deep learning applications in healthcare have addressed narrowly defined tasks using one data modality (Pranav Rajpurkar et al., 2022). In contrast, medical experts draw on health data from multiple sources and modalities when diagnosing and deciding treatment plans. Ideally, AI algorithms should, like medical experts, be able to access and learn from all available data sources to boost their performance. In this proposal, we aim to develop new deep learning architectures that take multiple modalities of data as input (Julián N. Acosta et al., 2022) and learn a robust multimodal representation of the data (Hai Pham et al., 2019; Yu Huang et al., 2021; Peiguang Jing et al., 2021; Angelo Cesar Mendes da Silva et al., 2022) for prediction tasks.
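
The proposal targets learned multimodal representations, which are beyond a short example. As a much simpler point of comparison, the sketch below shows late fusion: each modality has its own model that outputs a risk probability, and the outputs are combined by a weighted average that skips modalities missing for a given patient. This is a deliberately simplified baseline, not the proposed architecture; the modality names and weights are hypothetical.

```python
def late_fusion(modality_probs, weights):
    """Weighted average of per-modality risk probabilities.

    modality_probs maps a modality name to a probability in [0, 1],
    or to None when that modality was not recorded for the patient.
    weights maps every modality name to a non-negative weight.
    """
    available = {m: p for m, p in modality_probs.items() if p is not None}
    if not available:
        raise ValueError("at least one modality must be available")
    total_weight = sum(weights[m] for m in available)
    if total_weight <= 0:
        raise ValueError("available modalities must have positive total weight")
    return sum(weights[m] * p for m, p in available.items()) / total_weight

# Hypothetical per-modality outputs; SpO2 was not recorded for this patient.
probs = {"heart_rate": 0.8, "speech": 0.4, "spo2": None}
weights = {"heart_rate": 2.0, "speech": 1.0, "spo2": 1.0}
print(round(late_fusion(probs, weights), 3))
```

Handling missing modalities explicitly matters for at-home use, where a patient may own a smartphone but no wearable device.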

Model evaluation: To evaluate the proposed approaches, we will conduct both internal evaluations on in-house datasets and external evaluations on public datasets. A clinical assessment will also be performed in which medical experts take part in evaluating AI performance. The performance of the proposed AI systems will be measured by accuracy, precision, recall, F1-score, ROC curves, and AUC (area under the receiver operating characteristic curve).
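
For reference, the metrics listed above can be computed for a binary screening task as in the following sketch. It uses only the standard library; the helper names, the 0.5 decision threshold, and the toy labels and scores are illustrative assumptions. AUC is computed with the pairwise-ranking definition: the fraction of (positive, negative) pairs in which the positive case receives the higher score, with ties counted as one half.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = disease)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": (tp + tn) / len(y_true),
            "precision": precision, "recall": recall, "f1": f1}

def roc_auc(y_true, scores):
    """AUC as the probability that a positive outranks a negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy example with hypothetical labels and model scores.
y_true = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.6, 0.7, 0.2, 0.4, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]
print(classification_metrics(y_true, y_pred), round(roc_auc(y_true, scores), 3))
```

Accuracy alone can be misleading when a disease is rare in the screened population, which is why the threshold-free AUC and the precision and recall pair are reported alongside it.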

Clinical evaluation: For clinical evaluation, we will draw on clinical experts’ opinions to assess the specificity, sensitivity, and reliability of the models. To this end, we will compare the performance of the proposed AI systems with that of clinicians with different levels of expertise (junior and senior doctors). We will also investigate the impact of the mobile apps on patient outcomes.
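
A comparison between the AI system and clinicians could be tabulated as in the sketch below, which scores each reader against a reference diagnosis using sensitivity and specificity and measures agreement between two readers with Cohen's kappa. Treating kappa as the measure of reliability is one possible reading of the plan, not something the proposal specifies, and the reader names and labels are hypothetical.

```python
def sensitivity_specificity(reference, reader):
    """Sensitivity and specificity of a reader against reference labels (1 = disease)."""
    pos = [r for ref, r in zip(reference, reader) if ref == 1]
    neg = [r for ref, r in zip(reference, reader) if ref == 0]
    if not pos or not neg:
        raise ValueError("reference must contain both classes")
    sensitivity = sum(1 for r in pos if r == 1) / len(pos)
    specificity = sum(1 for r in neg if r == 0) / len(neg)
    return sensitivity, specificity

def cohens_kappa(reader_a, reader_b):
    """Chance-corrected agreement between two binary readers."""
    n = len(reader_a)
    observed = sum(1 for a, b in zip(reader_a, reader_b) if a == b) / n
    rate_a = sum(reader_a) / n
    rate_b = sum(reader_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    if expected == 1.0:
        return 1.0  # both readers always give the same single label
    return (observed - expected) / (1 - expected)

# Hypothetical reference diagnoses and reads from the AI and a senior doctor.
reference = [1, 1, 1, 0, 0, 0, 0, 1]
ai_reads = [1, 1, 0, 0, 0, 1, 0, 1]
senior_reads = [1, 1, 1, 0, 0, 0, 0, 0]
for name, reads in [("AI", ai_reads), ("senior", senior_reads)]:
    print(name, sensitivity_specificity(reference, reads))
print("kappa(AI, senior):", cohens_kappa(ai_reads, senior_reads))
```

Reporting the same quantities for junior doctors, senior doctors, and the AI on the same cases keeps the comparison like for like.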

Expected outcome and impact on wellness/health: Most previous studies have focused on applications of AI within the traditional hospital environment. However, the COVID-19 pandemic showed that one major challenge is the lack of access to physical exams, including accurate and inexpensive remote measurement of vital signs. Smartphone-based AI screening by frontline health workers provides an opportunity to increase early detection of disease in the community and to target high-risk populations and those who do not have access to primary care. Overall, community-based screening could substantially reduce morbidity from many diseases that benefit from early detection. Smartphone-based AI technologies hold promise for improving the diagnosis of diseases in resource-limited settings if they are carefully integrated into health systems. Empowering community health workers with smartphone AI technologies could bring preventative screening and diagnosis to low-resource settings. This project will also contribute a multimodal dataset for common disease screening and early detection, which will be the first dataset in Vietnam to provide multiple modalities. In addition, we will open-source our data collection and analysis tools to help advance the field of digital health care in Vietnam and worldwide.

Qualifications: Our multidisciplinary project requires expertise in many fields, from computer vision and speech analysis to neuroscience. Such a combination will lead us to creative and high-impact research. Mark Allan Hasegawa-Johnson is a Professor of Electrical and Computer Engineering at UIUC and Director of the Statistical Speech Technology Group. He has extensive experience in speech information processing. He is also a Fellow of the IEEE, for contributions to speech processing of under-resourced languages, and a Fellow of the Acoustical Society of America, for contributions to vocal tract and speech modeling. Minh Do is a Professor in Signal Processing and Data Science in the Department of Electrical and Computer Engineering at UIUC. Prof. Do has extensive experience in signal processing, computational imaging, machine perception, and data science. Hieu Pham is an Assistant Professor with more than 7 years of experience in Computer Vision and Medical Imaging Analysis. Dinh Nguyen is a signal processing expert at VinUniversity. Huyen Nguyen is a mobile health expert with many years of experience in telehealth. Professor Vo Thanh Nhan, M.D., is currently the Director of the Cardiovascular Center at Vinmec Times City International Hospital. Dr. Ngoc-Minh Ho is the Director of the 3D Motion Lab, Orthopaedic and Sports Medicine Center, Vinmec International Hospital. These medical experts have strong expertise in cardiovascular diseases and common neurodegenerative diseases. Nghia Nguyen, M.D., is the Deputy Director of the Integrated Mental Health Care Center at Vinmec Healthcare System.

Plan to recruit and co-advise Ph.D. students: This project opens many research topics, for which we plan to recruit 5-6 Ph.D. students for a 5-year period. These students will be co-advised by UIUC and VinUni faculty in the areas of machine learning, computer vision, speech processing, and bioengineering. The research topic for each Ph.D. student will be drawn from the three research objectives above, restated here:

- [Research Objective 1] Develop and clinically evaluate a multimodal AI system for predicting the risk of common heart diseases and stroke [2 Ph.D. students].

- [Research Objective 2] Analyze microphone-captured audio data for the detection of common respiratory diseases. Develop a novel technique for machine learning-based estimation of patient respiratory rate [2 Ph.D. students].

- [Research Objective 3] Develop a mobile, self-guided quantitative motion analysis app to detect early risk factors of neurological diseases (Parkinson’s and Alzheimer’s disease) [1 Ph.D. student].

Plan on the use of $20K + $20K to support each Ph.D. student and their project: Research funding that the research team will receive will be used to cover the following:

- Costs of data collection and labeling.

- Costs for mobile/wearable devices and developing annotation frameworks.

- Conference registration and publication fees.

- Cost of research visit-related travel, accommodation, and subsistence between VinUni-UIUC and UIUC-VinUni.

Future Extensions: The success of this study will open new opportunities to use commodity smartphones to build applications that support complete, accurate, and reliable health tests in regular home settings. These applications will measure multiple health parameters via visual and acoustic sensing. Clinical studies will be needed to determine whether the tools are reliable for disease tracking and evaluation outside the clinic. The research team expects to apply for external funding from VinIF or NSF grants to support an extensive clinical validation of the proposed AI systems by comparison against current diagnostic technology. This validation can be conducted at Vinmec Hospital and other major hospitals in Vietnam. We also plan to work with our clinical partners in the US through UIUC faculty, thus sustaining this multidisciplinary research collaboration between VinUni and UIUC. An important goal is to demonstrate that our AI systems are accurate tools for the early diagnosis and screening of common noncommunicable diseases and work effectively across countries. Finally, this proposal provides a great opportunity to explore technology transfer activities, and we anticipate that these tools will contribute to improving the current standards of patient care.